A variety of application architectures can satisfy the requirements of modern organizations. Most, however, are variations on one simple technological concept, the client-server model.

A client-server network is a model where multiple computers (clients) connect to a central server to access resources, data, or services. This setup is widely used in organizations and across the internet because it centralizes resources, making management and maintenance easier. Here’s how it works:

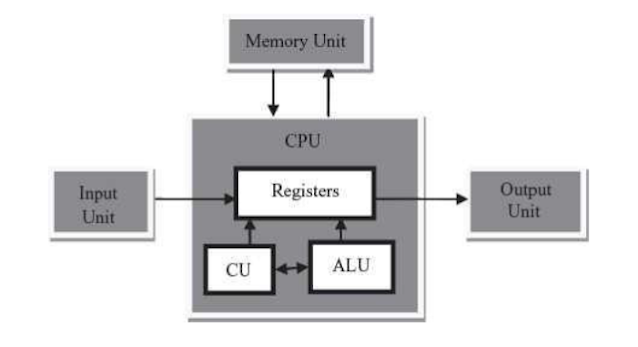

In a client-server configuration, a program on a client computer accepts services and resources from a complementary program on a server computer. The services and resources can include application programs, processing services, database services, Web services, file services, print services, directory services, e-mail, remote access services, and even the initial startup (boot) service for a computer system. In most cases, the client-server relationship is between complementary application programs. In certain cases, particularly for file services and printer sharing, the services are provided by programs in the operating system. Basic communication and network services are also provided by operating system programs. A single computer can act as both client and server, if desired.

Key Components of Client-Server Networks

- Server: The server is a powerful computer or software that stores resources, processes data, and provides services. It "serves" clients by responding to their requests.

- Client: A client is a device, such as a computer, smartphone, or tablet, that connects to the server to access resources like files, databases, or applications. Clients initiate communication with servers.

- Network Infrastructure: The infrastructure, including routers, switches, and cables or wireless connections, enables communication between clients and servers.

How Client-Server Networks Work

Request-Response Model: Clients send requests to the server for resources or services. The server processes these requests and sends back the appropriate response.
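The request-response exchange can be sketched in a few lines of Python using the standard `socket` module. This is only a minimal illustration, not a production server: the `RESOURCES` table, the function names, and the plain-text message format are all assumptions made for the example, and both ends run on the same machine over the loopback address.

```python
import socket
import threading

# Hypothetical resource table standing in for whatever the server stores.
RESOURCES = {"motd": "Welcome to the campus network"}

def serve_once(listener: socket.socket) -> None:
    """Server side: accept one client, read its request, send a response."""
    conn, _addr = listener.accept()
    with conn:
        request = conn.recv(1024).decode("utf-8").strip()
        reply = RESOURCES.get(request, "ERROR: unknown resource")
        conn.sendall(reply.encode("utf-8"))

def request_resource(host: str, port: int, name: str) -> str:
    """Client side: the client initiates the connection and sends a request."""
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(name.encode("utf-8"))
        return sock.recv(1024).decode("utf-8")

def demo(name: str) -> str:
    """Run one request-response exchange on the loopback interface."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
        listener.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        listener.listen(1)
        port = listener.getsockname()[1]
        worker = threading.Thread(target=serve_once, args=(listener,))
        worker.start()
        try:
            return request_resource("127.0.0.1", port, name)
        finally:
            worker.join()

if __name__ == "__main__":
    print(demo("motd"))
```

Note that the server never contacts the client on its own; it only waits and replies, which is the defining feature of the model.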

The most familiar example of the use of client-server technology is the Web browser–Web server model used in intranets and on the Internet. In its simplest form, this model is an example of two-tier architecture, which simply means that two computers are involved in the service. The key features of this architecture are a client computer running the Web browser application, a server computer running the Web server application, a communication link between them, and a set of standard protocols: HTTP for the communication between the Web applications, HTML for the data presentation requirements, and, usually, the TCP/IP protocol suite for the networking communications.

In the simplest case, a Web browser requests a Web page that is stored as a pre-created HTML file on the server. More commonly, the user is seeking specific information, and a custom Web page must be created "on the fly", using an application program that looks up the required data in a database, processes the data as necessary, and formats it to build the desired page dynamically. Although it is possible to maintain the database and perform the additional database processing and page creation on the same computer as the Web server, the Web server in a large Internet-based business may have to respond to thousands of requests simultaneously. Because response time is considered an important measure by most Web users, it is often more practical to separate the database and page processing into a third computer system.

The result, shown in Figure 2.8, is called a three-tier architecture. Note that, in this case, the Web server machine is a client to the database application and database server on the third computer. CGI, the Common Gateway Interface, is a standard interface through which the Web server can pass a request to an external program, making communication between the Web server and the database application possible.

In some situations, it is even desirable to extend this idea further. Within reason, separating different applications and processing can result in better overall control, can simplify system upgrades, and can minimize scalability issues. The most general case is known as an n-tier architecture.
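The division of labor in a three-tier design can be sketched as follows. This is a simplified illustration under stated assumptions: the `courses` table, its contents, and the function names are hypothetical, and an in-memory SQLite database stands in for what would be a separate database server in a real deployment.

```python
import sqlite3
from html import escape

def make_database() -> sqlite3.Connection:
    """Data tier: an in-memory database with a hypothetical `courses` table."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE courses (code TEXT PRIMARY KEY, title TEXT)")
    db.executemany(
        "INSERT INTO courses VALUES (?, ?)",
        [("CS101", "Intro to Computing"), ("CS240", "Networks & Systems")],
    )
    db.commit()
    return db

def lookup_title(db: sqlite3.Connection, code: str):
    """The page-building tier acts as a client of the data tier here."""
    row = db.execute(
        "SELECT title FROM courses WHERE code = ?", (code,)
    ).fetchone()
    return row[0] if row else None

def render_page(db: sqlite3.Connection, code: str) -> str:
    """Middle tier: look up the data, then build the HTML page on the fly."""
    title = lookup_title(db, code)
    if title is None:
        body = f"<p>No course named {escape(code)}.</p>"
    else:
        body = f"<h1>{escape(code)}</h1><p>{escape(title)}</p>"
    return f"<html><body>{body}</body></html>"

if __name__ == "__main__":
    print(render_page(make_database(), "CS240"))
```

Because `render_page` only talks to the database through `lookup_title`, the data tier could be moved to another machine without changing how pages are built, which is the practical benefit of separating the tiers.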

Types of Servers in Client-Server Networks

There are different types of servers based on the services they provide, such as:

- File Server: Stores and manages files.

- Database Server: Stores and provides access to databases.

- Web Server: Serves websites to clients over the internet.

- Application Server: Runs applications and serves them to clients, especially for web apps.

- Email Server: Manages email communications within an organization.

Advantages of Client-Server Networks

- Centralized Control: Resources and data are managed in a central location, making it easier to maintain, update, and secure.

- Efficient Resource Sharing: Servers can handle multiple requests, enabling efficient resource sharing and avoiding redundancy.

- Enhanced Security: Centralized control allows for consistent security measures, user permissions, and data access rules.

- Scalability: Servers can be upgraded to handle more clients as needed.

Disadvantages of Client-Server Networks

- Cost: Setting up and maintaining servers can be costly due to the need for powerful hardware, software, and networking infrastructure.

- Dependency on Server: If the server fails, clients may lose access to resources, disrupting operations.

- Requires Specialized Management: Maintaining servers and securing a client-server network requires skilled IT personnel.

Example

A college network might use a client-server model where students (clients) connect to a central server to access files, educational software, or the internet. The server could manage access permissions, keep data secure, and apply updates.

In summary, client-server networks are efficient and manageable for medium to large-scale networks, ideal for organizations that require central resource management and security.

picture courtesy: quizlet.com

One of the strengths of client-server architecture is its ability to enable different computer hardware and software to work together. This provides flexibility in the selection of server and client equipment tailored to the needs of both the organization and the individual users. One difficulty that sometimes arises when different computers have to work together is potential incompatibilities between the application software that resides on different equipment. This problem is commonly solved with software called middleware. Middleware resides logically between the servers and the clients.

Typically, the middleware will reside physically on a server with other applications, but on a large system it might be installed on its own server. Either way, both clients and servers send all request and response messages to the middleware. The middleware resolves problems between incompatible message and data formats before forwarding the messages. It also manages system changes, such as the movement of a server application program from one server to another. In this case, the middleware would forward the message to the new server transparently. The middleware thus assures continued system access and stability. In general, the use of middleware can improve system performance and administration.
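The two middleware duties described above, format translation and transparent forwarding, can be illustrated with a toy sketch. Everything here is an assumption made for the example: the `key=value;key=value` legacy format, the `ROUTES` table, and the server names are hypothetical, and real middleware products are far more elaborate.

```python
import json
from typing import Tuple

# Hypothetical routing table: logical service name -> server currently hosting it.
ROUTES = {"inventory": "server-a"}

def legacy_to_json(message: str) -> str:
    """Translate a 'key=value;key=value' message into JSON text."""
    fields = dict(pair.split("=", 1) for pair in message.split(";") if pair)
    return json.dumps(fields, sort_keys=True)

def forward(service: str, message: str) -> Tuple[str, str]:
    """Return (destination server, translated message) for a client request."""
    return ROUTES[service], legacy_to_json(message)

if __name__ == "__main__":
    print(forward("inventory", "item=widget;qty=3"))
    # The application moves to another server; clients keep using the same
    # logical name and never see the change.
    ROUTES["inventory"] = "server-b"
    print(forward("inventory", "item=widget;qty=3"))
```

The client addresses the service by its logical name only, so updating one entry in the routing table is enough to redirect every client.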

Web Based Computing

The widespread success of the World Wide Web has resulted in a large base of computer users familiar with Web techniques, powerful development tools for creating Web sites and Web pages and for linking them with other applications, and protocols and standards that offer a wide and flexible variety of techniques for the collection, manipulation, and display of data and information. In addition, a powerful website is already a critical component in the system strategy of most modern organizations. Much of the data provided for the website is provided by architectural components of the organization’s systems that are already in place.

Not surprisingly, these factors have led system designers to retrofit and integrate Web technology into new and existing systems, creating modern systems which take advantage of Web technology to collect, process, and present data more effectively to the users of the system.

The user of a Web-based system interacts with the system using a standard Web browser, enters data into the system by filling out Web-style forms, and accesses data using Web pages created by the system in a manner essentially identical to those used for the Internet. The organization’s internal network, commonly called an intranet, is implemented using Web technology. To the user, integration between the intranet and the Internet is relatively seamless, limited only by the security measures designed into the system. This system architecture offers a consistent and familiar interface to users; Web-enabled applications offer access to the organization’s traditional applications through the Web. Web technology can even extend the reach of these applications to employees in other parts of the world, using the Internet as the communication channel.

Since Web technology is based on a client-server model, it requires only a simple extension of the n-tier architecture to implement Web-based applications. As an example, Figure 2.9 shows a possible system architecture to implement Web-based e-mail.

Many organizations also now find it possible and advantageous to create system architectures that integrate parts of their systems with other organizations using Web technology and Web standards as the medium of communication. For example, an organization can integrate and automate its purchasing system with the order system of its suppliers to automate control of its inventory, leading to reduced inventory costs, as well as to rapid replacement and establishment of reliable stocks of inventory when they are needed. Internet standards such as XML allow the easy identification of relevant data within data streams between interconnected systems, making these applications possible and practical. This type of automation is a fundamental component of modern business-to-business operations.
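To see why XML makes the relevant data easy to identify, consider a small sketch using Python's standard `xml.etree.ElementTree` module. The `purchaseOrder` document, its element and attribute names, and the `parse_order` function are invented for illustration; an actual business-to-business exchange would follow a schema agreed between the two organizations.

```python
import xml.etree.ElementTree as ET

# Hypothetical purchase order as it might arrive from a partner's system.
ORDER_XML = """
<purchaseOrder supplier="ACME">
  <item sku="W-100" quantity="25"/>
  <item sku="G-200" quantity="10"/>
</purchaseOrder>
"""

def parse_order(xml_text: str):
    """Return (supplier name, {sku: quantity}) extracted from the order."""
    root = ET.fromstring(xml_text.strip())
    items = {
        item.get("sku"): int(item.get("quantity"))
        for item in root.iter("item")
    }
    return root.get("supplier"), items

if __name__ == "__main__":
    supplier, items = parse_order(ORDER_XML)
    print(supplier, items)
```

Because each value is labeled by its tag and attribute name, the receiving system can pick out the fields it needs without knowing anything about the sender's internal software.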

Peer to Peer Computing

An alternative to client-server architecture is peer-to-peer architecture. Peer-to-peer architecture treats the computers in a network as equals, with the ability to share files and other resources and to move them between computers. With appropriate permissions, any computer on the network can view the resources of any other computer on the network, and can share those resources. Since every computer is essentially independent, it is difficult or impossible to establish centralized control to restrict inappropriate access and to ensure data integrity. Even where the integrity of the system can be assured, it can be difficult to know where a particular file is located, and there is no assurance that the computer holding that file is actually accessible when the file is needed. (The particular computer that holds the file may be turned off.) The system also may have several versions of the file, each stored on a different computer. Synchronization of different file versions is difficult to control and difficult to maintain. Finally, since data may pass openly through many different machines, the users of those machines may be able to steal data or inject viruses as the data passes through. All of these reasons are sufficient to eliminate peer-to-peer computing from consideration in any organizational situation where the computers in the network are controlled by more than one individual or group. In other words, nearly always.

There is one exception: peer-to-peer computing is adequate, appropriate, and useful for the movement of files between personal computers or to share a printer in a small office or home network.

Peer-to-peer technology has also proven viable as an Internet file sharing methodology outside the organizational structure, particularly for the downloading of music and video. The perceived advantage is that the heavy loads and network traffic associated with a server are eliminated. (There are legal ramifications, also, for a server that is sharing copyrighted material illegally.) This technique operates on the assumption that the computer searching for a file is able to find another computer somewhere by broadcasting a request across the Internet and establishing a connection with a nearby computer that can supply the file. Presumably, that computer already has established connections with other systems. All of these systems join together into a peer-to-peer network that can then share files. One serious downside to this approach, noted above, is the fact that the computers in an open, essentially random, peer-to-peer network can also be manipulated to spread viruses and steal identities. There are several serious documented cases of both.

An alternative, hybrid model uses client-server technology to locate systems and files that can then participate in peer-to-peer transactions. The hybrid model is used for instant messaging, for Skype and other online phone systems, and for Napster and other legal file download systems.
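The hybrid idea can be sketched in a few lines: a central index answers "who has this file?" in client-server fashion, and the file itself then moves directly between peers. This is a toy, single-process model; the `IndexServer` and `Peer` classes are hypothetical, and the `peers` dictionary stands in for real network connections between machines.

```python
class IndexServer:
    """Central server: knows who holds each file, but stores no file data."""

    def __init__(self):
        self._holders = {}  # filename -> set of peer names

    def register(self, peer_name, filename):
        self._holders.setdefault(filename, set()).add(peer_name)

    def locate(self, filename):
        return sorted(self._holders.get(filename, set()))


class Peer:
    """A peer holds files locally and exchanges them directly with other peers."""

    def __init__(self, name, index):
        self.name = name
        self.index = index
        self.files = {}  # filename -> bytes

    def share(self, filename, data):
        self.files[filename] = data
        self.index.register(self.name, filename)  # client-server step

    def fetch(self, filename, peers):
        """Ask the index who has the file, then copy it from that peer."""
        for holder in self.index.locate(filename):
            if holder != self.name and filename in peers[holder].files:
                data = peers[holder].files[filename]  # peer-to-peer step
                self.share(filename, data)  # this peer can now serve it too
                return data
        return None


if __name__ == "__main__":
    index = IndexServer()
    peers = {name: Peer(name, index) for name in ("alice", "bob")}
    peers["alice"].share("notes.txt", b"lecture 1")
    print(peers["bob"].fetch("notes.txt", peers))
    print(index.locate("notes.txt"))
```

The index carries only small lookup messages, so the heavy file traffic never passes through the central server.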

Although there have been research studies to determine if there is a place for peer-to-peer technology in organizational computing, the security risks are high, the amount of control low, and the overall usefulness limited. The results to date have been disappointing.
